As your application grows, sending notifications—whether via email, SMS, or push—quickly becomes more complex. Take a simple example: when a new user signs up, your backend might call an email service directly within the signup API to send a verification code. While this works at a small scale, it doesn't scale well. With hundreds of concurrent signups, you risk hitting rate limits, experiencing timeouts, or even crashing your app. Worse, some users might get registered in the database but never receive the verification email due to a failure—leaving them stuck and unable to re-register because of unique email constraints. This tightly coupled approach can hurt both performance and user experience.

In contrast, in-app notifications are often easier to scale because they don't rely on external services. As long as your internal code is efficient (smart querying, caching, batching), you can handle them reliably at scale. The moment you depend on third-party services such as email or SMS providers, however, you introduce potential bottlenecks and points of failure. In this post, we’ll walk through the system design of a scalable notification system that decouples concerns, improves fault tolerance, and handles high throughput gracefully, focusing on the design rather than a full implementation.

🧠 Understanding the Problem

Before diving into the architecture, let’s break down the requirements for a scalable notification system:

- Handle multiple types of notifications (email, SMS, in-app, push)
- Work asynchronously (so the main flow isn’t blocked)
- Ensure reliable delivery with retries
- Be fault-tolerant and loosely coupled
- Support high throughput (thousands of notifications per second)

🏗 Designing the Solution

To handle notifications reliably at scale, we need to decouple the sending logic from the core application flow, process notifications asynchronously, and introduce fault tolerance. Here's how we can break it down:

1. Decoupling with a Message Queue

Instead of calling the notification service directly in your API (e.g., after a user signs up), publish an event to a message queue.

Example Flow:

- ✅ API handles user signup → 📥 sends a `user.signup` event to the queue → 🛠 worker processes the event and sends the verification email

Why?

- Prevents blocking the main API
- Absorbs spikes in traffic
- Adds buffer and retry options in case of failures

Popular tools:

- RabbitMQ
- Kafka
- Redis Streams
- AWS SQS / Google Pub/Sub
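The signup flow in this section can be sketched in a few lines. This is a minimal illustration, not a production setup: Python's in-process `queue.Queue` stands in for a real broker, and `handle_signup`, `send_verification_email`, and `worker` are hypothetical names chosen for the example.

```python
import queue
import threading

# In-memory stand-in for a real broker (RabbitMQ, SQS, ...). Assumption for the sketch.
event_queue: queue.Queue = queue.Queue()
sent_emails: list = []


def handle_signup(email: str) -> dict:
    """API handler: publish an event and return without waiting for delivery."""
    # The database write for the new user would happen here.
    event_queue.put({"type": "user.signup", "email": email})
    return {"status": "created", "email": email}


def send_verification_email(email: str) -> None:
    """Placeholder for the call to an email provider."""
    sent_emails.append(email)


def worker() -> None:
    """Background consumer: takes events off the queue and delivers them."""
    while True:
        event = event_queue.get()
        try:
            if event is None:  # sentinel used to stop the worker
                break
            if event["type"] == "user.signup":
                send_verification_email(event["email"])
        finally:
            event_queue.task_done()
```

To try it, start `worker` in a `threading.Thread`, call `handle_signup(...)`, and note that the handler returns before the email is "sent". With a real broker the worker would run in a separate process or service.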

2. Worker Services for Delivery

Create dedicated worker services (aka consumers) to handle different notification types:

- 📧 `EmailWorker` → sends emails via your provider (e.g., SendGrid, SES)
- 📱 `SMSWorker` → handles SMS (e.g., Twilio)
- 🔔 `PushWorker` → push notifications (e.g., Firebase)
- 🖥 `InAppWorker` → stores in-app notifications in your DB

Each worker:

- Listens for messages from the queue
- Executes the delivery logic
- Handles retries and logging

3. Retry Logic & Dead Letter Queues

Failures will happen—network issues, API limits, bad payloads. Use retry logic with backoff (e.g., exponential delays) and a dead-letter queue (DLQ) for persistent failures.

Best Practices:

- Retry transient errors (timeouts, 5xx) automatically
- Don’t retry permanent failures (like 400 errors)
- Send failed messages to DLQ for inspection
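One way to encode these rules is a small decision function that runs after every delivery attempt. The sketch below is illustrative: the `Action` enum and `classify` function are hypothetical names, and treating HTTP 429 (rate limiting) as transient is an assumption on my part, in line with the "API limits" case mentioned above.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    DONE = "done"
    RETRY = "retry"
    DEAD_LETTER = "dead_letter"


def classify(status_code: Optional[int], attempt: int, max_attempts: int = 5) -> Action:
    """Decide what to do with a message after a delivery attempt.

    status_code is the provider's HTTP status, or None for a timeout or
    network error. attempt counts from 1.
    """
    if status_code is not None and 200 <= status_code < 300:
        return Action.DONE
    # Assumption: timeouts, 429 and 5xx are worth retrying; everything else is not.
    transient = status_code is None or status_code == 429 or status_code >= 500
    if transient and attempt < max_attempts:
        return Action.RETRY
    # Permanent failure (e.g., 400) or retries exhausted: park it for inspection.
    return Action.DEAD_LETTER
```

For example, `classify(503, attempt=1)` asks for a retry, while `classify(400, attempt=1)` sends the message straight to the DLQ.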

4. Idempotency & Deduplication

Ensure your system doesn’t send the same notification twice due to retries.

Ways to achieve this:

- Generate a unique ID per notification
- Use Redis or database constraints to track what’s already been sent
- Workers should be idempotent (repeated executions = same outcome)
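For the unique ID, one option is to derive it deterministically from the triggering event, so a retried or re-published event always maps to the same notification. This is a sketch under that assumption; the function name and its three inputs are hypothetical, and a random UUID assigned once at publish time would work just as well.

```python
import hashlib


def notification_id(event_id: str, channel: str, recipient: str) -> str:
    """Derive a stable ID so the same event, channel, and recipient always match."""
    # The unit-separator character keeps ("ab", "c") distinct from ("a", "bc").
    raw = "\x1f".join((event_id, channel, recipient))
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()
```

Because the ID depends only on its inputs, a worker that sees the same event twice computes the same ID and can detect the duplicate.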

5. Observability & Monitoring

You can’t scale what you can’t see. Add monitoring around:

- 📈 Queue length
- ✅ Success/failure rate per notification type
- ⚠️ DLQ entries or error spikes

Tools:

- Prometheus + Grafana
- DataDog / New Relic
- Log aggregation with ELK or Loki

6. Scaling with Throughput in Mind

As traffic grows, you need to scale:

- 🧵 Add more worker instances (horizontal scaling)
- ⚙️ Use partitions (Kafka) or sharding (Redis) to balance load
- 💾 Optimize DB writes with batching, caching, or upserts
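The idea behind partitioning or sharding is to map each message key to one of N buckets with a stable hash, so load spreads out while messages for the same key stay together. Here is a minimal sketch of that idea; `partition_for` is a hypothetical helper and is not the partitioner Kafka or Redis actually uses.

```python
import hashlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key (e.g., a user ID) to a partition index using a stable hash."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Use the first 8 bytes as an unsigned integer, then reduce modulo N.
    return int.from_bytes(digest[:8], "big") % num_partitions
```

Python's built-in `hash()` is avoided here because string hashes are randomized per process, which would send the same user to different partitions on different workers.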

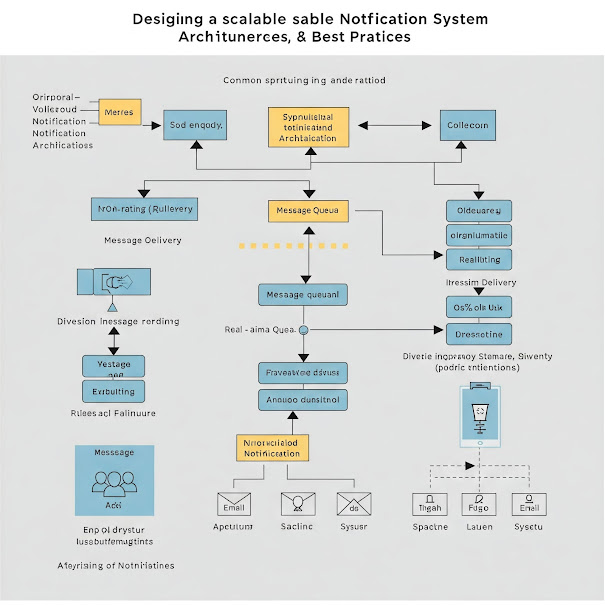

⚙️ Sample Architecture Diagram

API → Event Queue → Workers → External Services & In-App Delivery

This is a basic overview of how a scalable notification system should be structured. Now, let’s dive deeper into each component to understand how it works, the tradeoffs involved, and how to make it production-ready.

🔁 Deeper Dive: Message Queue Design & Prioritization

One of the first challenges you’ll face is that not all notifications are equal in urgency.

For example:

- ✅ A verification email should be sent immediately after signup—users can’t continue without it.
- 👋 A welcome email can be delayed by a few seconds or even minutes without harming UX.

But queues are typically FIFO (First-In, First-Out), which means if your welcome email is queued before the verification email, it could delay the critical message. To avoid this, we need a strategy for prioritizing urgent messages.

✅ Option 1: Use Multiple Queues by Priority

The simplest and most flexible approach is to separate your queues based on priority:

- `high-priority-notifications` (e.g., verification emails, 2FA codes)
- `low-priority-notifications` (e.g., welcome emails, promotional content)

Then, deploy dedicated workers for each queue:

- High-priority workers process fast and with more resources
- Low-priority workers can be throttled or scaled down

This way, a backlog in one queue won’t block the other.
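On the publishing side, this option boils down to a routing decision per notification. Below is a minimal sketch with in-memory queues standing in for real ones; the queue names come from the list above, while `URGENT_TYPES`, `route`, and `publish` are hypothetical names for the example.

```python
import queue

HIGH = "high-priority-notifications"
LOW = "low-priority-notifications"

# Assumed mapping of notification types to urgency; adjust to your own types.
URGENT_TYPES = {"verification_email", "2fa_code"}

# In-memory stand-ins for two separate broker queues.
queues = {HIGH: queue.Queue(), LOW: queue.Queue()}


def route(notification: dict) -> str:
    """Pick the queue name for a notification based on its type."""
    return HIGH if notification["type"] in URGENT_TYPES else LOW


def publish(notification: dict) -> str:
    """Put the notification on its queue and return the queue name used."""
    name = route(notification)
    queues[name].put(notification)
    return name
```

Each queue then gets its own pool of workers, so a flood of welcome emails only grows the low-priority backlog.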

⚖️ Option 2: Use Priority Queues (if your queue system supports it)

Some queue systems support message-level priorities, allowing a single queue to process high-priority messages first:

- RabbitMQ: Has built-in support for priority queues
- SQS: Doesn’t natively support message priority, so you’d need to simulate it with multiple queues
- Kafka: Doesn’t support priority natively; you’d need topic partitioning or consumer logic to simulate it

Drawback: Managing message-level priority inside a single queue can get complex and may not scale cleanly.
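To see what message-level priority means in practice, here is a small in-memory model built on a heap. It is only a sketch of the behavior, not how any particular broker implements it; `PriorityMailbox` is a hypothetical name, and in this sketch a lower number means more urgent.

```python
import heapq
import itertools


class PriorityMailbox:
    """Single queue that hands out the most urgent message first."""

    def __init__(self) -> None:
        self._heap: list = []
        self._counter = itertools.count()

    def put(self, message: dict, priority: int) -> None:
        # The counter breaks ties so messages of equal priority stay FIFO
        # and dicts are never compared with each other.
        heapq.heappush(self._heap, (priority, next(self._counter), message))

    def get(self) -> dict:
        """Remove and return the most urgent message (IndexError if empty)."""
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

A welcome email queued first with priority 5 is still delivered after a verification email queued later with priority 0.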

🧠 Option 3: Schedule Delayed or Background Messages

Instead of prioritizing critical messages, you can defer less critical ones. For example:

- Send the verification email immediately
- Schedule the welcome email to be sent 5–10 minutes later using:
  - Delayed messages (RabbitMQ plugins or Redis sorted sets)
  - A background job scheduler (like BullMQ with delayed jobs)
  - Or even a separate cron-based worker

This keeps your high-priority queue lean while still delivering all content.
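The sorted-set approach works by scoring each message with the time it becomes due and having a poller fetch everything whose score is at or before "now". The sketch below models that idea in memory with a heap; `DelayedQueue` is a hypothetical class, and the `now` parameter exists only so the example can be exercised without waiting.

```python
import heapq
import itertools
import time
from typing import Optional


class DelayedQueue:
    """Holds messages until their due time, like a sorted set scored by timestamp."""

    def __init__(self) -> None:
        self._heap: list = []
        self._seq = itertools.count()

    def schedule(self, message: dict, delay_seconds: float, now: Optional[float] = None) -> None:
        now = time.time() if now is None else now
        heapq.heappush(self._heap, (now + delay_seconds, next(self._seq), message))

    def pop_due(self, now: Optional[float] = None) -> list:
        """Remove and return every message whose due time has passed."""
        now = time.time() if now is None else now
        due = []
        while self._heap and self._heap[0][0] <= now:
            due.append(heapq.heappop(self._heap)[2])
        return due
```

A poller would call `pop_due()` on a short interval and forward whatever comes back to the regular delivery queue.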

🔑 Key Takeaway

Don’t treat all notifications the same. Use separate queues or delayed delivery to ensure critical messages get sent first—especially when scaling across thousands of users.

🛠 Deeper Dive: Worker Services for Delivery

Once your messages are in a queue, they need to be consumed and processed by dedicated workers. These are background services responsible for delivering the actual notification (email, SMS, push, etc.). How you design these workers impacts system reliability, performance, and fault tolerance.

🧩 Worker Responsibilities

Each worker should:

- Consume messages from its assigned queue (e.g., `high-priority-notifications`)
- Process and validate the payload (e.g., ensure the email, template ID, and data are valid)
- Call the external service (e.g., SendGrid, Twilio, Firebase)
- Handle errors and retries
- Log and store results (for observability or audits)
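Put together, the per-message part of a worker might look like the sketch below. It assumes a payload with `email`, `template_id`, and `data` fields (my guess at a shape, based on the validation example above) and takes the provider call as a `send` function so no real SDK is needed; all names are hypothetical.

```python
import logging
from typing import Callable

logger = logging.getLogger("email_worker")

REQUIRED_FIELDS = ("email", "template_id", "data")  # assumed payload shape


def validate(payload: dict) -> list:
    """Return a list of problems; an empty list means the payload is usable."""
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in payload]
    email = payload.get("email")
    if email is not None and "@" not in str(email):
        problems.append("invalid email address")
    return problems


def handle_message(payload: dict, send: Callable[[dict], None]) -> str:
    """Process one message: validate, deliver, and report the outcome."""
    problems = validate(payload)
    if problems:
        logger.error("rejected payload: %s", problems)
        return "rejected"  # permanent failure, should not be retried
    try:
        send(payload)  # the call to the external provider goes here
    except Exception:
        logger.exception("delivery failed")
        return "failed"  # the caller decides whether to retry
    logger.info("delivered to %s", payload["email"])
    return "delivered"
```

Returning an outcome instead of raising keeps the retry decision in one place, outside the delivery code.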

🧵 Use Dedicated Workers per Channel

Split workers by channel type to isolate failures and simplify scaling:

- `EmailWorker`: Handles all email sending
- `SMSWorker`: Sends verification or alert messages
- `PushWorker`: Sends mobile push notifications
- `InAppWorker`: Writes to the in-app notification DB table

Benefits:

- Failures in one channel (e.g., email provider downtime) don’t impact others
- Allows independent scaling (e.g., you may need 10x more email workers than SMS)
- Easier logging, monitoring, and debugging

🔁 Implement Retry Logic with Exponential Backoff

When a notification fails (e.g., network error or 500 from provider), you shouldn’t give up immediately. Instead, implement a retry strategy:

- Exponential backoff: Retry after 1s, then 3s, then 10s, etc.
- Retry limit: Don’t retry forever; 3 to 5 attempts is typical
- Separation of concerns: Retry logic should be handled inside the worker, not the queue system

If retries keep failing, move the message to a Dead Letter Queue (DLQ).
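Here is a minimal sketch of that retry loop. The names (`TransientError`, `backoff_delay`, `deliver_with_retries`) are hypothetical; the default factor of 3 gives delays of 1s, 3s, 9s, which is close to the schedule above, and the DLQ is modeled as a plain list.

```python
import time
from typing import Callable


class TransientError(Exception):
    """Raised by send() for failures worth retrying (timeouts, 5xx)."""


def backoff_delay(attempt: int, base: float = 1.0, factor: float = 3.0, cap: float = 60.0) -> float:
    """Seconds to wait after failed attempt number `attempt` (1-based), capped."""
    return min(cap, base * factor ** (attempt - 1))


def deliver_with_retries(
    message: dict,
    send: Callable[[dict], None],
    dead_letters: list,
    max_attempts: int = 4,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Try to send; back off between attempts; dead-letter after the last one."""
    for attempt in range(1, max_attempts + 1):
        try:
            send(message)
            return True
        except TransientError:
            if attempt == max_attempts:
                break
            sleep(backoff_delay(attempt))
    dead_letters.append(message)
    return False
```

Only `TransientError` is caught, so permanent failures propagate immediately instead of being retried. Sleeping inside the worker keeps the sketch short; under load you would more likely re-queue the message with a delay so the worker stays free for other jobs.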

🚨 Handle Irrecoverable Failures Gracefully

Some failures should not be retried:

- Bad payload (e.g., missing email address)
- Blacklisted numbers or addresses
- Validation errors (e.g., invalid country code)

For these, log the error, mark the job as permanently failed, and never retry.

🔁 Idempotency & De-duplication

Workers must be idempotent, especially since retries can cause the same message to be processed more than once.

Strategies:

- Generate a unique `notificationId` for each message
- Store this in a DB or Redis as a processed record
- On each run, check if the notification was already sent using that ID

This ensures:

- No double emails
- No billing surprises (for SMS)
- Better UX (no duplicate push/in-app alerts)
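A database uniqueness constraint is one simple way to implement the "processed record" check. The sketch below uses SQLite from the standard library so it runs anywhere; the `processed` table and the `open_store` and `send_once` helpers are hypothetical, and the same pattern applies to any database with unique keys.

```python
import sqlite3
from typing import Callable


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open the store and make sure the processed-records table exists."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS processed (notification_id TEXT PRIMARY KEY)")
    return conn


def send_once(conn: sqlite3.Connection, notification_id: str, send: Callable[[], None]) -> bool:
    """Send only if this ID has not been recorded yet; return True if sent now."""
    try:
        with conn:  # commits on success, rolls back if anything raises
            conn.execute(
                "INSERT INTO processed (notification_id) VALUES (?)",
                (notification_id,),
            )
            send()
    except sqlite3.IntegrityError:
        return False  # the ID is already recorded, so this is a duplicate
    return True
```

If `send()` raises, the insert is rolled back and a later retry can try again. There is still a narrow window where the send succeeds but the commit fails, so this reduces duplicates rather than ruling them out entirely.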

🔍 Monitoring Each Worker

To detect issues early:

- Track job success/failure rates
- Monitor retry spikes
- Alert on DLQ growth
- Track processing time per job

Tools:

- Prometheus + Grafana
- Sentry for error tracking
- Logging with Loki, ELK, or Cloud-specific tools
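Whatever tool you pick, the worker has to record the raw numbers first. Here is a minimal in-memory sketch of that bookkeeping; `WorkerMetrics` is a hypothetical class, and in a real deployment these counters would be exported to your monitoring system rather than kept in a Python object.

```python
import time
from collections import Counter, defaultdict
from typing import Callable


class WorkerMetrics:
    """Counts outcomes and processing times per channel."""

    def __init__(self) -> None:
        self.outcomes: Counter = Counter()         # (channel, outcome) -> count
        self.durations: dict = defaultdict(list)   # channel -> seconds per job

    def record(self, channel: str, outcome: str, seconds: float) -> None:
        self.outcomes[(channel, outcome)] += 1
        self.durations[channel].append(seconds)

    def failure_rate(self, channel: str) -> float:
        ok = self.outcomes[(channel, "success")]
        failed = self.outcomes[(channel, "failure")]
        total = ok + failed
        return failed / total if total else 0.0

    def timed(self, channel: str, job: Callable[[], None]) -> None:
        """Run a job, recording its duration and whether it raised."""
        start = time.perf_counter()
        try:
            job()
        except Exception:
            self.record(channel, "failure", time.perf_counter() - start)
            raise
        self.record(channel, "success", time.perf_counter() - start)
```

An alert rule can then be as simple as "failure rate for email above some threshold over the last few minutes".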

🧠 Pro Tip

Keep your workers stateless and self-contained. They should be replaceable, horizontally scalable, and fault-tolerant. Think of each worker like a disposable, single-responsibility microservice.
